This is an article I wrote for the publication accompanying the conference Research in Graphic Design at the Academy of Fine Arts Katowice, where I gave a talk on the subject in January 2012. Please excuse the lack of illustrations. I will try to add some later, but usually those are empty promises, as you can see in other posts on this site.

It is a recurring phenomenon that we tend to sort what comes in large amounts, to be able to grasp it, for quicker reference, and to find it again more easily. Once organized you don’t have to look at everything all the time but only consult the parts of your current interest. The vast world of type is a prime case. Grouping typefaces also breaks down the process of identifying them into a less challenging task.

Any categorization covers three aspects: 1. sorting into groups (this is what scholars and historians do, also type manufacturers), 2. referencing (educating) and 3. “taking out” or finding (this is what the user usually does). The aspect of finding a typeface though is crucial to many more people, every day, than the act of classifying them. You sort your CDs once and then only look at the respective shelf when you want to listen to Jazz for example. This is why I think a (more) useful classification is one that helps the user to find and select typefaces and which is structured accordingly.

What happened?

Assigning names to typefaces and classifying them is a rather new occurrence in our 560 years of type. In the beginning, i.e. the first 400 years of typography, typefaces didn’t even have specific names. Foundries and printers called them by their size (and those sizes actually had names like “Paragon”, “Great Primer”, “Nonpareil”, not numbers of units). All type looked more or less the same anyway and was suitable for more or less the same jobs – continuous text. If a printer had more than one version of a roman text face available they numbered them, e.g. “Great Primer Roman No.2”.

Then the industrial revolution happened and with it came the wish for louder and more eye-catching typefaces than regular Bodoni at 24 pt. Plenty of flashy new designs were invented, large jobbing type and numerous variations in style were starting to become available. With this, people saw the need to give the novel things names to communicate about them. But which? Most typefaces weren’t based on historic models you could derive terminology from.

So type foundries all invented their own, more or less arbitrary designations for their new styles, e.g. “Egyptian” (because everything Egypt was super en vogue after Napoleon came back from his campaign), “Gothic” or “Grotesque” for sans-serif typefaces (because this new alien style seemed weird) or “Ionic”, “Doric” and “Antique” for slab-serifs. Not only the designs were becoming more creative and individual but also the terminology, resulting in the problem that genre names were not universally understood anymore. Terms were determined by marketing, not by appearance or historic roots.

Still, the actual typefaces themselves were not given individual names like today. A foundry rarely had more than two or three “French Clarendons” on offer and an easy solution was just to continue numbering them.

Until around 1900 only the slightest to no attempts were made to classify typefaces. Rather it was considered “redundant, impossible or utterly inconvenient”. One of the earliest endeavors was the system proposed by Francis Thibaudeau in 1921. It is based solely on the form of the serifs (as was Aldo Novarese’s later system of 1964), which I regard as less than ideal, but up until this stage in type history, it admittedly was a characteristic feature distinguishing the different style periods rather fittingly. [schemes for “Uppercase” and “Lowercase”]

By the mid 20th century however, with new type issued weekly, it became increasingly difficult to keep up with the developments and to obtain a working knowledge of the countless variants known. For the first time classification was regarded as a problem and serious efforts were made to establish a systematic approach to sort typefaces and to come up with an international solution.

The Thibaudeau system was developed further by Maximilian Vox (born Samuel William Théodore Monod) who published his version in 1954. Continuing with the same main groups as Thibaudeau, Vox’s unique contribution was a set of group names derived from the most iconic printers / examples (Garalde, Didone) or techniques (Manuale).

The Vox-system was – slightly modified – taken over by the ATypI (Association Typographique Internationale) in 1960 and later internationally adopted as a standard. Adapted versions were published by the German DIN in 1964 and as a British Standard in 1967.

The limitations of those systems

An ever-growing market for typefaces and countless new variants in style show that the old systems like Vox put too much emphasis on the historical order and the early seriffed typefaces. At the same time they generalize greatly when it comes to sans and slabs. This is understandable when we consider the age they were created in. The popular and influential neo-grotesks of the late 1950s like Helvetica and Univers weren’t even issued back then and the international style – and with it the surge of sans-serif type – was just starting to take off.

The original idea of Vox was to enable the combination of different groups and terms, for instance to have a Garalde sans-serif (= humanist sans). This alas was never really implemented apart from variations in the British Standard and additional explanatory text for the DIN classification. A similarly overlooked detail is that ATypI originally suggested that its members, the different countries, subdivide the simple structure further to their liking. ATypI also did not define the terminology since that was the part especially hard to agree on. Instead they assigned numbers to each group to allow comparison and left the translation of terms to the authors of the international adaptations.

Unfortunately, those ideas are largely forgotten. In fact, now with a fully international market and type community we see that it is exactly the diverse terminology that is the biggest cause of confusion. Neither the terms coined by the type foundries nor the ones used in published classification systems are anywhere near being internationally compatible. For example the French call sans-serif faces Antique, the Germans Grotesk, the Americans Gothic which on the other hand is the term for blackletter in European countries.

Unambiguous terminology might now be even more important than a coherent, rational approach to sort typefaces. Because before we even attempt to achieve an agreeable classification, we have to be able to communicate about type and letterforms with all parties involved – designers, printers, compositors, students, manufacturers, scholars, engineers and perhaps even laymen.

Two (new) different ideas

There are two different approaches to classification which I regard as more practical.

1. Classification according to form model

This is an idea based on the writing and letter theories of Gerrit Noordzij which I first put together after learning calligraphy and typeface design in the Netherlands. It doesn’t follow Noordzij’s terminology exactly, but his work inspired me in the search for more “generic” terms, not connected to a certain style period, because I found that the historic ones can cause quite some confusion among beginners. What makes a brand new font an Old Style typeface and one from 1790 a Modern? Or what do Humanist, Renaissance and Garalde mean here anyway?

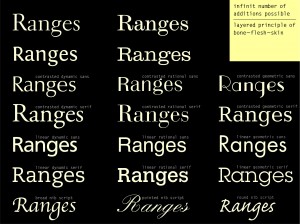

I expanded Noordzij’s theories into a layered system comparable to “bones, flesh and skin”. Most text typefaces can be differentiated according to a small number of basic form models. You could call these the bones or skeletons of a typeface. Those principles of form are largely determined by the former writing tools – e.g. the broad nib or pointed nib – and how the stroke contrast originally came into being.

- dynamic (humanist) form model: forms, contrast and structure, derived from writing with a broad-nib pen. Noordzij calls this type of contrast translation.

- rational (modern) form model: forms derived from writing with a pointed pen = expansion

- geometric form model: rather drawing the linear skeleton form with a round speedball pen, like in Futura = no contrast

These three models, the underlying structural principles, are also visible in the letterforms when you reduce the stroke contrast or remove the serifs. They determine the impression and application of a typeface to a very large extent. Of course, a beginning designer doesn’t understand terms like expansion or broad-nib pen any easier than French Renaissance. But what most of us can agree on is the description and general appearance of the character shapes:

- Writing with a broad-nib pen, held at a consistent angle, delivers an inclined course of contrast, open apertures and diverse stroke widths. This gives the letters a dynamic and varied general form and feel (also in the italics and caps, which follow the proportions of the Capitalis).

- In writing with a pointed pen, the thickness of the stroke is related to the pressure put upon the nib during a downstroke, while other strokes remain thin. The axis is vertical with high but less modulated contrast and rather closed apertures. This gives the letters a more static, stiff impression. The letterforms (e.g. q, p, d, b) and their proportions are rather similar, especially the width of the caps.

- The round nib renders linear, more “drawn”-looking, constructed forms (e.g. circular o) like in Futura or monoline scripts. Caps often follow the classical proportions of the Capitalis.

The second level – the flesh – is about the equipment and features applied to the skeleton of a typeface. Those are serifs and stroke contrast, either strongly visible or just a slight contrast to achieve the impression of optical linearity. The actual form of the serifs – triangular, bracketed or straight – is not as determining to me as it was for Thibaudeau. One can incorporate these specific differences into the third “layer” of descriptives.

The third, the skin level, gives us the possibility to introduce an infinite number of finer differentiations between the main groups of typefaces to describe even the most singular feature someone could ever look for. Descriptives can address different forms of serifs, like bracketed or straight serifs in traditional rational serifs like Scotch Modern and Didone, or ornamented ones. Also decorative features like stencil, inline, shadow are possible, or terms related to genre or application like western, horror, comic or agate, typewriter, low-res. This detailed gradation can also be seen as a collection of tags.

With this set at hand, all kinds of typefaces can be easily described by combining the terms of each group, just like Vox imagined it, too. A Tuscan typeface for example could be characterized as a face with modern skeleton, little stroke-contrast, bifurcated serifs, western-style, chromatic, poster, decorative, shadow, display and so forth. Okay, this is probably not the unique, catchy term most of us would like to have at our disposal for type genres, but these terms describe the typeface appropriately.
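To make the layering concrete, here is a minimal sketch of how such a three-layer description could be stored and combined. The class name, the field names and the typeface name "Example Tuscan" are my own illustrative assumptions and not part of any existing tool; the terms are taken from the Tuscan example above, with "modern skeleton" expressed as the rational form model.

```python
from dataclasses import dataclass, field


@dataclass
class TypefaceDescription:
    """Hypothetical record for the bones / flesh / skin layers."""
    name: str
    form_model: str  # "bones": dynamic, rational or geometric
    features: list[str] = field(default_factory=list)      # "flesh": serifs, contrast
    descriptives: list[str] = field(default_factory=list)  # "skin": free tags

    def describe(self) -> str:
        # Combine the terms of all three layers into one readable description.
        parts = [f"{self.form_model} form model", *self.features, *self.descriptives]
        return ", ".join(parts)


# "Example Tuscan" is a made-up name used only for illustration.
tuscan = TypefaceDescription(
    name="Example Tuscan",
    form_model="rational",
    features=["little stroke contrast", "bifurcated serifs"],
    descriptives=["western-style", "chromatic", "poster",
                  "decorative", "shadow", "display"],
)

print(tuscan.describe())
```

Keeping the form model as a single required field and the other two layers as open lists mirrors the idea that the skeleton is one basic decision, while features and descriptives can be extended freely.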

The big advantage I see in this system is that the groups relate to the impression and, to some extent, the use of the typefaces. It is relatively easy to assign atmospheric keywords to the form models, like warm, open, friendly to the humanist model and rather regular, strict, formal to the rational form model. This helps the selection of typefaces enormously, because the impression and atmosphere you want to achieve is usually what you think of first when you start looking for a typeface. At least I do. Also, it aids combining typefaces as all fonts that stand in one vertical column here combine well and harmoniously, whereas mixing the horizontal neighbors is more tricky. If you are looking for a more contrasting combination you can pair the typefaces diagonally. So, either stay in one form model or go for lots of difference.

This system was published in several German-language reference books, including mine, and has since been relatively widely used in Germany. However, it is not flawless and sometimes difficult to adapt for real-life applications. The terminology remains my main construction site. Do people actually understand what is meant by “dynamic” and “static”? The latter was my replacement term for the initial “rational” but right now I tend to prefer “rational” again, because I have a hard time describing a rationalized English roundhand or modern italic as “static”.

Also, one could argue that the problem with any taxonomical approach is that a typeface can only be “one of those things”, even if we think of it more like piles or fraying clusters and less of self-contained drawers. It’s not realistic to say that a typeface can only be serif or sans given the numerous semi-sans and semi-serif examples today. Similarly, we know typefaces which happily live in the middle between the humanist and the rational form model. So, where to put those? I’d advocate placing them on the play-board near what determines the feel of the typeface most, even if we give up immaculate grouping for that. An alternative would be to introduce more piles or to find a way to assign a typeface to more than just one group or descriptive, like what you can do in a database environment.

One would think that an interactive system solves exactly this problem but actually the adaptation for FontShop’s FontBook and FontShuffle applications I worked on was rather tricky. My system works surprisingly well as a simple list, because it brings the (coincidental) chronological order of the first few groups out more clearly. It works okay in a matrix, especially because you can change the axes (form models in horizontal order or vertical) and “enter” it from different directions. But comprehensibly sorting over 7500 typefaces from the FontShop catalog into a customized classification I made for their iPad app was a challenging acid test. The main reason for my problems was the set-up of their database though, which only allowed typefaces to be assigned to one group. This ultimately proved to me that the world of type is not as simple (anymore).

2. Micro-Classification or tagging

A possible solution to this problem and another approach I have grown very fond of in recent years is the micro-classification you can call tagging. It is at first a non-hierarchical approach, which makes it far more flexible and user-centred, often even user-generated. You could call it a democratic take on classification. If people subjectively regard this typeface as “holiday” or “girlish”, then why not have them find the typeface with those keywords? The problem with tags added by users, and also marketing people, though, is monitoring. I did this voluntarily for MyFonts extensively in the past (besides tagging typefaces) and was just stunned at times by the silly and ridiculous tags that some assigned to fonts.

Tagging works more or less only in an (interactive) database environment. The most comprehensive example for this might be the MyFonts.com website, but other type vendors also work with a similar, more or less successful, system. A good browsing and search interface is crucial, as you can see in the example of fonts.com with its long, unstructured list of keywords. What is most confusing on there – on a page they call “classification” – are keywords like “serif”, “script” or “simplified chinese” next to “scary” on the same level. In my opinion, it would be practical to offer tags in a basic hierarchical order as an entry point to all those different styles of typefaces (different “levels” of keywords). For example displaying “serif” as a different level tag than “holiday”. Speaking with several type manufacturers though I got an additional view. Some told me that sales went up significantly after they added more tags, more informal tags that is. So, what to do if people apparently find the typefaces they want this way? Should you force-educate them and force your classification on everyone, even if it may not be helpful to them?

The biggest issue in an international tag system, however, is the language, or again, terminology. French users might want to tag or look up sans-serif typefaces under the term “Antique” while the search brings up a list of decorated slab-serifs (see ambiguities mentioned before).
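One way to picture a possible remedy is a lookup table that resolves a regional term to a neutral concept before searching. The following is only a sketch under my own assumptions: the locale codes ("fr", "de", "us", "eu") are crude simplifications invented for this example, the function name is hypothetical, and the table contains nothing beyond the ambiguities mentioned in this article.

```python
from typing import Optional

# (locale, regional term) -> neutral concept.
# Locale codes are illustrative simplifications, not a real standard mapping.
TERMS = {
    ("fr", "antique"): "sans-serif",
    ("de", "grotesk"): "sans-serif",
    ("us", "gothic"): "sans-serif",
    ("eu", "gothic"): "blackletter",
    ("us", "antique"): "slab-serif",
}


def resolve(term: str, locale: str) -> Optional[str]:
    """Return the neutral concept for a regional term, or None if unknown."""
    return TERMS.get((locale, term.strip().lower()))


print(resolve("Antique", "fr"))  # French usage
print(resolve("Antique", "us"))  # foundry usage for slab-serifs
print(resolve("Gothic", "eu"))   # European usage
```

The same word resolves to different concepts depending on who is asking, which is exactly why the search needs to know the user's terminology before it can return a sensible list.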

Stepping back

As I have been busy with this topic for 14 years now, I get really desperate at times. I can understand why my predecessors didn’t want to continue to bother at some point and why the discussion is preferably avoided at conferences. Although I had extensive experience from teaching and earlier tests, I was hoping to find some new clues in a small study. What are the more “weighty” characteristics? How do people distinguish typefaces?

Well, to cut a long story short, it was not as fruitful as I had hoped and just brought up what I already knew or suspected.

I gave students and friends of different levels of knowledge a pile of type samples and let them sort those into groups however they wanted. After that I asked them to assign names to their groups. To get the most disappointing outcome out of the way first – this last task did not bring up anything at all. They had a very hard time naming the groups. Students with some knowledge used the existing terminology, blending all systems they knew of, i.e. called some dynamic or static, used Vox names for other groups or the traditional German (DIN) terminology. The ones who did not have any education in typography were able to describe what they saw and sorted, but couldn’t come up with a single, catchy term. Well – what did I expect? This is not surprising at all.

What was verified is that they separated script or decorated faces from text faces first. Secondly they separated serifs and sans. Thirdly – and actually more pronouncedly than I thought – they separated typefaces with stroke contrast from linear ones. Even to the extent that some set apart fonts that are supposed to look linear and have just small optical adjustments, like Univers or Bureau Grotesque. My guess is that this was because they didn’t have other criteria at hand, e.g. weren’t familiar with the idea of form models for further distinctions. The form model was – not surprisingly – the most advanced, hence most difficult thing to recognize. It is obviously a fact that distinguishing typefaces must be learned.

“Unfortunately, many researchers in type classification become so involved they forget the basic purpose of any attempt to formalize a structure: simple communication.” — Alexander S. Lawson

Conclusion and outlook

The problem with research in any field is that you dive into a subject on such a specialized and detailed level that you forget that your distance to the language and knowledge of normal people gets greater and greater. It helps to step back every now and then and ask the actual user. A classification should first of all help them to find, select and combine typefaces, and only then the scholar. Or at least this is what I find is lacking right now. The historically savvy expert has sophisticated language and methods to describe letterforms of the past and maybe even present. But I, too, sometimes forget that others don’t easily see those unique features in typefaces that I can make out in seconds. I want to find a tool that also helps entry-level users of type to recognize the differences and similarities among typefaces and find clues about their potential use.

My hope is to be able to combine all those different approaches of classifications into a flexible system that works on several levels of sophistication – for beginners and experts. We cannot abandon all old systems, and even less so, the different terminology established over the years. We have to come up with a way to integrate all this and explain it comprehensibly.

My proposal works well with most of the traditional groups of text faces and it follows the historical order in the serif categories. At the same time it is open to new additions to the typographic palette. One can easily incorporate different levels of descriptives: form model, main formal features (serifs, contrast), and details and associative terms. The third level could work as a user-centric tag collection. In a database environment all those levels of descriptives would be assigned as tags anyway, just differently displayed in different user scenarios. Because the main illusion I/we have to give up is to think a typeface can only be “one thing” – either sans or serif, either Old Style or Modern. Groups of typefaces shouldn’t be pre-filled buckets anymore, but rather a customized set of fonts at my disposal when I select “serif”, “rational” and maybe other key words.
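A small sketch may help to show what “no pre-filled buckets” could mean in a database environment. Everything here is an assumption for illustration: the typeface names are placeholders, the tag sets are invented, and the two helper functions are hypothetical rather than taken from any real font catalog.

```python
# Placeholder catalog: each typeface carries a free set of tags from all levels.
CATALOG = {
    "Face A": {"serif", "contrast", "dynamic"},
    "Face B": {"serif", "contrast", "rational"},
    "Face C": {"sans", "linear", "geometric"},
    "Face D": {"serif", "sans", "rational", "holiday"},  # a semi-serif lives in both
}


def select(catalog, *wanted):
    """Return the typefaces that carry every selected tag."""
    wanted_set = set(wanted)
    return sorted(name for name, tags in catalog.items() if wanted_set <= tags)


def group_by(catalog, level_tags):
    """Display the same catalog as a classification for one chosen level.

    A typeface may appear in more than one group.
    """
    groups = {tag: [] for tag in level_tags}
    for name, tags in sorted(catalog.items()):
        for tag in level_tags:
            if tag in tags:
                groups[tag].append(name)
    return groups


print(select(CATALOG, "serif", "rational"))
print(group_by(CATALOG, ["serif", "sans"]))
print(group_by(CATALOG, ["dynamic", "rational", "geometric"]))
```

The first call builds a customized set on demand; the other two show the same data displayed as a simple serif/sans overview for beginners or grouped by form model for more advanced users.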

The challenge now is to translate a collection of tags into a versatile visual form that can be used in teaching, talks, and publications, displaying the different levels of descriptives. Likely, the exact visualization will be different each time and adapted to the specific task. But what we do need is a common understanding and a common language to know what we are talking about.

Hello Ms. Indra Kupferschmid,

this is a very interesting article on the subject of “type classification”. Unfortunately, my insufficient knowledge of English technical terminology keeps me from enjoying it in full, but I believe I have understood the essential points.

My opinion on the subject is this: as your account of past classification systems illustrates very nicely, all classification systems have several problems that can never be solved to complete satisfaction.

First, all these systems primarily represent the horizon of ideas of the person who developed them. So there will only be a larger or smaller overlap with the horizon of other people. Only those people will understand the system, approve of it and apply it.

Then, all these systems came into being at a particular time, or perhaps better, in a particular situation. Accordingly, they pursue a purpose. If the situation changes or time passes, the classification system loses its justification.

What may also play a role is the fact that every person perceives things differently. What is already an extreme deviation for one person is only a slight variation for another.

From the above follows the fairly certain assumption that every system has a half-life. It may be shorter or longer, but it is certain. And then there will only be another system update. But never a definitive solution.

For the practical usability and findability of typefaces it is therefore much more important to create a tool that is up to date everywhere and at all times, and that depicts typefaces and relates them to one another independently of language and classification. Searching for type is a matter for the eyes. You look at samples and say yes or no to them. In both cases I must have ways to steer the direction of my search. Perhaps with sliders, as was done with Multiple Master fonts. You design a virtual ideal typeface and see what comparable, already designed typefaces the tool finds. Such a tool, together with internationally valid criteria for producing font files, would advance the usability of existing typeface designs considerably further than any new type classification.

I agree with you completely, and I would find such a system, or rather back end, very desirable (and attempts at it do exist). However, there is also always the case where you want to talk about the differences and variables of typefaces abstractly, non-interactively. The users of a system like the one you describe would also have to learn it first. If you use generally understandable words as search terms, such as “elegant”, “masculine” or “stiff”, laypeople may find it easier to get started. And as I said, linking and searching for a typeface with keywords only works in a database environment.

My overview (I have not gotten around to posting it yet) is thus only a representation for one of many conceivable purposes: above all to show the kinship between typefaces beyond the obvious differences such as serifs and contrast. This is what I call Formprinzip, or form model in English. Other classifications that I have worked out on the basis of this idea look different.

Ideally, we could tag typefaces with keywords for the three “layers” I proposed, e.g. “serifs, contrast, static, bracketing, teardrop-shaped stroke endings”. Then one could output not only a matching list in each case, but also simply the matching classification for the case at hand. For laypeople perhaps only a division into serif, sans-serif, slab-serif etc.; for the specialists the form model can be added, and this may be combined with a keyword list that can contain special technical terms such as “monospaced”, describe details, or contain tags such as “summer”, “hardcore” or “wedding”. Everything is miscellaneous.

For me the question remains which language we should use. Do we make ourselves a German keyword system, the French make theirs, and both possibly don’t understand that of the Americans, and vice versa? Unfortunately, this small specialist field of typography has become very international by now.

I very much like your idea of breaking down classification of typefaces into tiers of descriptions. Catherine Dixon, I believe, poses a similar idea, though with variation. Her formal attributes category seems related to your second category, yet her other categories, influence and patterns, are more historical in reference. (http://ow.ly/a42Qk)

In addition to incorporating structure elements into the classification scheme, I would also advocate for having a larger, more comprehensive glossary of descriptive terms, such as wedge serif, bracketed serif, teardrop terminal, ball terminal, etc. This way we, as students, could study and reference all of the different variations in letterform and become more familiar with each in kind. This, I believe, is similar to what you describe in your second tier. Yet, I find this information, at a detailed level, very hard to come by. Perhaps, like you say, it is because of conflict in terminology.

I would also agree with your suggestion to separate out levels of descriptive tags. I like the idea of separating structural descriptions from connotative ones. Connotative tags, though useful for those looking for “fun, friendly” typefaces, are changeable whereas structural descriptions are less volatile and less subjective. Structural tags could be established by designers or moderators of the database, whereas connotative tags could be more user-generated.

Yes, a comprehensive resource about terminology would be desirable. I am in regular communication with Nick Sherman about that, e.g. we did a session together about classification and terminology at the ATypI conference in Reykjavík. He is deeply absorbed in terminology and is working on a resource, a book or whatever form it might get in the end.

I was preparing an overview about the groups and connections I see and the kind of levels of descriptives I have in mind. I hope to be able to post this later this week. I know Catherine’s work and regard it as very interesting. It focuses more on the particular application, the lettering record, and, like you said, the historical connection, though.

Maybe it is impossible to create a scheme that is easy to understand for beginners and helpful to professionals at the same time. For the average use of the typeface it is not so crucial whether it has teardrop or straight terminals on the a. But the form model determines the general feel of the typeface, and whether it is legible under difficult circumstances.

I don’t know whether you are already familiar with these studies, but I thought I would contribute something from my research:

A quote from a scholarly study by Anne Rose König:

“Ovink’s test subjects were asked to assign certain adjectives to a large number of printing types. He concluded that the atmospheres of the tested typefaces could be roughly divided into three categories: ‘luxury-refinement, economy-precision, strength’. This study places particular emphasis on the importance of correctly matching a typeface with a specific atmosphere value to the content of the printed matter, since the reader’s emotional reactions can have a great influence on the individual legibility of the text, especially on reading speed.”

PDF: http://www.alles-buch.uni-erlangen.de/Koenig.pdf

The study König refers to is the following: Ovink, G. W. Legibility, Atmosphere-Value and Forms of Printing Types. Leiden 1938.

A comprehensive and more recent survey on how readers assess typefaces (unfortunately only standard web fonts) can also be found in Martin Liebig: http://www.designtagebuch.de/die-gefuehlte-lesbarkeit/2/

“What is quite interesting, though, is that the serious subject areas of politics and culture are apparently still very strongly associated with classically cut typography on the consumer side – for no other question did serif typefaces take all three podium places.”

So perhaps, after prior, larger data collections, the tagging system could be limited to a few terms, or even, as with Ovink and Liebig, be divided into additional categories that relate to context / connotations. Although the problem here is always that all these aesthetic categories are very fuzzy. As you have already written, it is simply necessary to distribute the tags across different hierarchical levels. Not everyone will judge a typeface to be “journalistic” … (somehow absurd). Another problem is that the aesthetic assessment of typefaces changes – would these surveys have to be repeated every few years?

On the language problem: that can be conveniently solved with databases, comparable to conversion tables, so that no mix-ups occur as with “Antique”.

Another reader survey, conducted in 2009, can be found in Shaik: http://www.surl.org/usabilitynews/112/typeface.asp

„This article presents results from a study investigating the personality of typefaces. Participants were asked to rate 40 typefaces (from serif, sans serif, display, and handwriting classes) using semantic differential scales. Responses are shown by typeface class and individual typeface using scaled scores.”